Introduction

Perhaps there might be scenarios in which one prefers to use a

parametric test vs the non-parametric bootstrap-based resampling method

to calculate AE under-reporting. simaerep provides a

function that does bootstrap resampling of the entire study preserving

the actual site parameters such as number of patients and

visit_med75.

Using this pool we can check whether the p-values calculated by

poisson.test() represent accurate probabilities.

Test Data and Standard Processing

We simulate three studies with varying ae per visit rate and then use all functions we already introduced.

df_visit1 <- sim_test_data_study(

n_pat = 1000,

n_sites = 100,

frac_site_with_ur = 0.2,

ur_rate = 0.5,

max_visit_mean = 20,

max_visit_sd = 4,

ae_per_visit_mean = 0.5

)

df_visit1$study_id <- "ae_per_visit: 0.5"

df_visit2 <- sim_test_data_study(

n_pat = 1000,

n_sites = 100,

frac_site_with_ur = 0.2,

ur_rate = 0.5,

max_visit_mean = 20,

max_visit_sd = 4,

ae_per_visit_mean = 0.2

)

df_visit2$study_id <- "ae_per_visit: 0.2"

df_visit3 <- sim_test_data_study(

n_pat = 1000,

n_sites = 100,

frac_site_with_ur = 0.2,

ur_rate = 0.5,

max_visit_mean = 20,

max_visit_sd = 4,

ae_per_visit_mean = 0.05

)

df_visit3$study_id <- "ae_per_visit: 0.05"

df_visit <- bind_rows(df_visit1, df_visit2, df_visit3)

df_site <- site_aggr(df_visit)

df_sim_sites <- sim_sites(df_site, df_visit)

df_visit## # A tibble: 58,505 × 9

## patnum site_number is_ur max_visit_mean max_visit_sd ae_per_visit_mean visit

## <chr> <chr> <lgl> <dbl> <dbl> <dbl> <int>

## 1 P000001 S0001 TRUE 20 4 0.25 1

## 2 P000001 S0001 TRUE 20 4 0.25 2

## 3 P000001 S0001 TRUE 20 4 0.25 3

## 4 P000001 S0001 TRUE 20 4 0.25 4

## 5 P000001 S0001 TRUE 20 4 0.25 5

## 6 P000001 S0001 TRUE 20 4 0.25 6

## 7 P000001 S0001 TRUE 20 4 0.25 7

## 8 P000001 S0001 TRUE 20 4 0.25 8

## 9 P000001 S0001 TRUE 20 4 0.25 9

## 10 P000001 S0001 TRUE 20 4 0.25 10

## # ℹ 58,495 more rows

## # ℹ 2 more variables: n_ae <int>, study_id <chr>

df_site## # A tibble: 300 × 6

## study_id site_number n_pat n_pat_with_med75 visit_med75 mean_ae_site_med75

## <chr> <chr> <int> <dbl> <dbl> <dbl>

## 1 ae_per_vis… S0001 10 10 15 0.3

## 2 ae_per_vis… S0002 10 9 15 0.667

## 3 ae_per_vis… S0003 10 8 17 0.375

## 4 ae_per_vis… S0004 10 10 15 0.3

## 5 ae_per_vis… S0005 10 9 18 0.222

## 6 ae_per_vis… S0006 10 9 16 0.556

## 7 ae_per_vis… S0007 10 9 17 0.667

## 8 ae_per_vis… S0008 10 9 16 0.333

## 9 ae_per_vis… S0009 10 10 14 0.3

## 10 ae_per_vis… S0010 10 10 17 0.2

## # ℹ 290 more rows

df_sim_sites## # A tibble: 300 × 10

## study_id site_number n_pat n_pat_with_med75 visit_med75 mean_ae_site_med75

## <chr> <chr> <int> <dbl> <dbl> <dbl>

## 1 ae_per_vis… S0001 10 10 15 0.3

## 2 ae_per_vis… S0002 10 9 15 0.667

## 3 ae_per_vis… S0003 10 8 17 0.375

## 4 ae_per_vis… S0004 10 10 15 0.3

## 5 ae_per_vis… S0005 10 9 18 0.222

## 6 ae_per_vis… S0006 10 9 16 0.556

## 7 ae_per_vis… S0007 10 9 17 0.667

## 8 ae_per_vis… S0008 10 9 16 0.333

## 9 ae_per_vis… S0009 10 10 14 0.3

## 10 ae_per_vis… S0010 10 10 17 0.2

## # ℹ 290 more rows

## # ℹ 4 more variables: mean_ae_study_med75 <dbl>, n_pat_with_med75_study <int>,

## # pval <dbl>, prob_low <dbl>Simulate Studies

sim_studies() reproduces each study a hundred times using bootstrap resampling maintaining the number of patients and the visit_med75 of each site.

set.seed(1)

plan(multisession, workers = 6)

df_sim_studies <- sim_studies(df_visit, df_site,

r = 100,

parallel = TRUE,

poisson_test = TRUE,

prob_lower = TRUE,

.progress = FALSE)

df_sim_studies## # A tibble: 30,000 × 8

## r study_id site_number visit_med75 n_pat_with_med75 n_pat_study pval

## <dbl> <chr> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 1 ae_per_visi… S0001 15 10 905 1

## 2 1 ae_per_visi… S0002 15 9 906 0.673

## 3 1 ae_per_visi… S0003 17 8 760 1

## 4 1 ae_per_visi… S0004 15 10 905 1

## 5 1 ae_per_visi… S0005 18 9 678 0.850

## 6 1 ae_per_visi… S0006 16 9 828 1

## 7 1 ae_per_visi… S0007 17 9 759 1

## 8 1 ae_per_visi… S0008 16 9 828 1

## 9 1 ae_per_visi… S0009 14 10 942 1

## 10 1 ae_per_visi… S0010 17 10 758 0.714

## # ℹ 29,990 more rows

## # ℹ 1 more variable: prob_low <dbl>Check p-value Probabilities

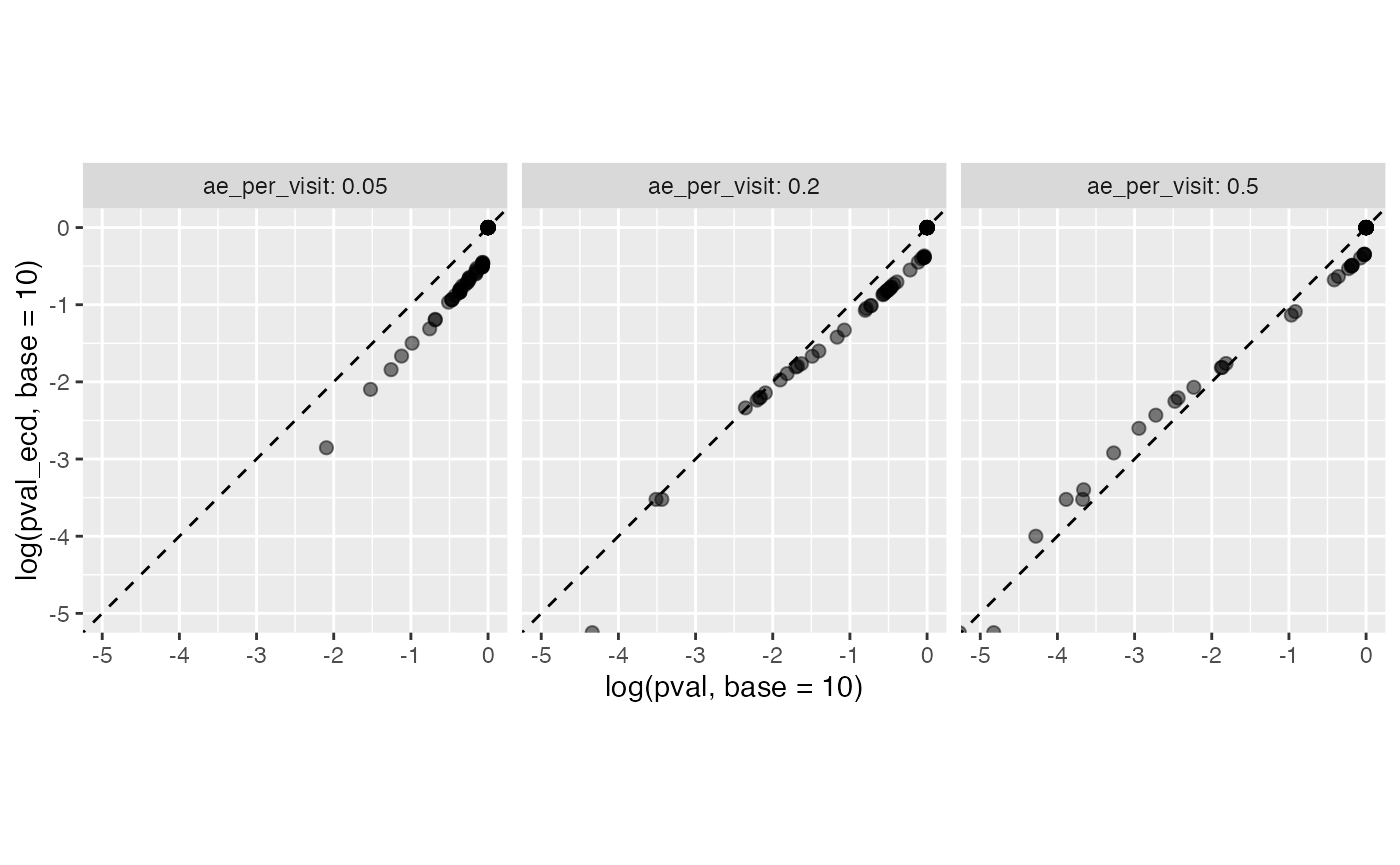

get_ecd_values() uses the p-value distribution in the dataframe returned from sim_studies() to train a empirical cumulative distribution function for each study which is then used to calculate the probability of a specific p-value or lower from the poisson.test p-value returned from sim_sites()

Note: Dots on the edge of the graph that are cut off have zero value for the corresponding axis.

df_check_pval <- get_ecd_values(df_sim_studies, df_sim_sites, val_str = "pval")

df_check_pval## # A tibble: 300 × 11

## study_id site_number n_pat n_pat_with_med75 visit_med75 mean_ae_site_med75

## <chr> <chr> <int> <dbl> <dbl> <dbl>

## 1 ae_per_vis… S0001 10 10 15 0.3

## 2 ae_per_vis… S0002 10 9 15 0.667

## 3 ae_per_vis… S0003 10 8 17 0.375

## 4 ae_per_vis… S0004 10 10 15 0.3

## 5 ae_per_vis… S0005 10 9 18 0.222

## 6 ae_per_vis… S0006 10 9 16 0.556

## 7 ae_per_vis… S0007 10 9 17 0.667

## 8 ae_per_vis… S0008 10 9 16 0.333

## 9 ae_per_vis… S0009 10 10 14 0.3

## 10 ae_per_vis… S0010 10 10 17 0.2

## # ℹ 290 more rows

## # ℹ 5 more variables: mean_ae_study_med75 <dbl>, n_pat_with_med75_study <int>,

## # pval <dbl>, prob_low <dbl>, pval_ecd <dbl>

df_check_pval %>%

ggplot(aes(log(pval, base = 10), log(pval_ecd, base = 10))) +

geom_point(alpha = 0.5, size = 2) +

facet_wrap(~ study_id) +

geom_abline(slope = 1, linetype = 2) +

coord_cartesian( xlim = c(-5,0), ylim = c(-5,0) ) +

theme(aspect.ratio = 1)

We can see that the p-values from poisson.test (x-axis) more or less match the probability with which they are represented in the resampled studies (y-axis), but there is some skewness to their relationship.

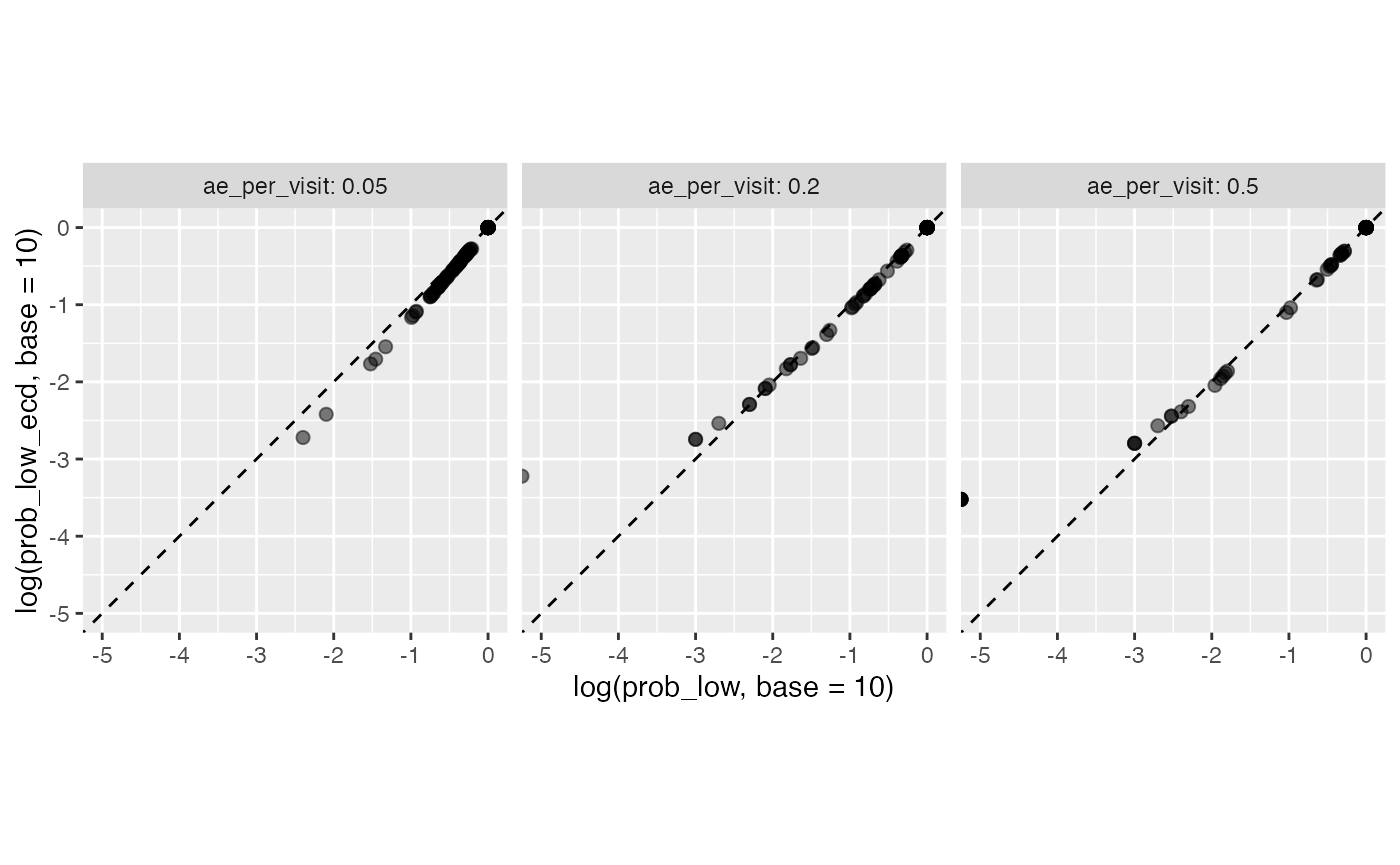

Perform Same Check on Bootstrapped Probabilities

Note: Dots on the edge of the graph that are cut off have zero value for the corresponding axis. For the tie simulations using 1000 repeats the smallest value greater zero we get for prob_low is 0.001 (1e-3).

df_check_prob <- get_ecd_values(df_sim_studies, df_sim_sites, val_str = "prob_low")

df_check_prob## # A tibble: 300 × 11

## study_id site_number n_pat n_pat_with_med75 visit_med75 mean_ae_site_med75

## <chr> <chr> <int> <dbl> <dbl> <dbl>

## 1 ae_per_vis… S0001 10 10 15 0.3

## 2 ae_per_vis… S0002 10 9 15 0.667

## 3 ae_per_vis… S0003 10 8 17 0.375

## 4 ae_per_vis… S0004 10 10 15 0.3

## 5 ae_per_vis… S0005 10 9 18 0.222

## 6 ae_per_vis… S0006 10 9 16 0.556

## 7 ae_per_vis… S0007 10 9 17 0.667

## 8 ae_per_vis… S0008 10 9 16 0.333

## 9 ae_per_vis… S0009 10 10 14 0.3

## 10 ae_per_vis… S0010 10 10 17 0.2

## # ℹ 290 more rows

## # ℹ 5 more variables: mean_ae_study_med75 <dbl>, n_pat_with_med75_study <int>,

## # pval <dbl>, prob_low <dbl>, prob_low_ecd <dbl>

df_check_prob %>%

ggplot(aes(log(prob_low, base = 10), log(prob_low_ecd, base = 10))) +

geom_point(alpha = 0.5, size = 2) +

facet_wrap(~ study_id) +

geom_abline(slope = 1, linetype = 2) +

coord_cartesian( xlim = c(-5,0), ylim = c(-5,0) ) +

theme(aspect.ratio = 1)

Here we see that the probabilities from the site simulations (x-axis) perfectly match probability with which they are represented in the resampled studies (y-axis).

Conclusion

We see that the bootstrap method gives us more accurate probabilities than the p-values derived from poisson.test even though the AE generation in the sample data follows a strict poisson process. For real clinical trial data with different types of studies for which it is not clear whether AE generation is truly based on a pure poisson process we recommend to use the bootstrap method. If poisson.test shall be used we recommend checking the applicability as we just demonstrated on clinical data set.

plan(sequential)